The (in)sanity of performance testing web applications

At some point in the life of a product whose owners value the user’s experience, an organization ends up developing some kind of performance test. These tests come in different forms, however. For example, a load test is used to observe the behavior of the system while simulating a well-known set of circumstances (users, usage scenarios, etc.). A stress test, on the other hand, tries to hit the system as hard as it can to see if and when it succumbs under the load. But whatever your goals are, before you put too much trust in the results, you need to understand the complexities of such a test. It’s fairly common to end up with results that say nothing about the real deal.

What are we measuring?

Imagine a web-based application which has been designed using modern front-end development techniques. Such a Single Page Application (SPA) typically uses an architecture where a majority of the application runs in the browser and relies on HTTP APIs to interact with server-side logic. That raises the question of which parts should be included in the performance test.

An end-to-end test that uses the actual browser is quite impractical. Developers who’ve been automating their application using Nightwatch, Selenium or Puppeteer know exactly what I’m talking about. As an alternative, you can write tests that act directly on the HTTP APIs and ignore the existence of the browser. If those APIs were designed to be used outside the application (and are not treated as private), then they’re usually pretty stable. Having them covered by an automated performance test makes total sense and shouldn’t be that difficult to accomplish.

Requests vs scenarios

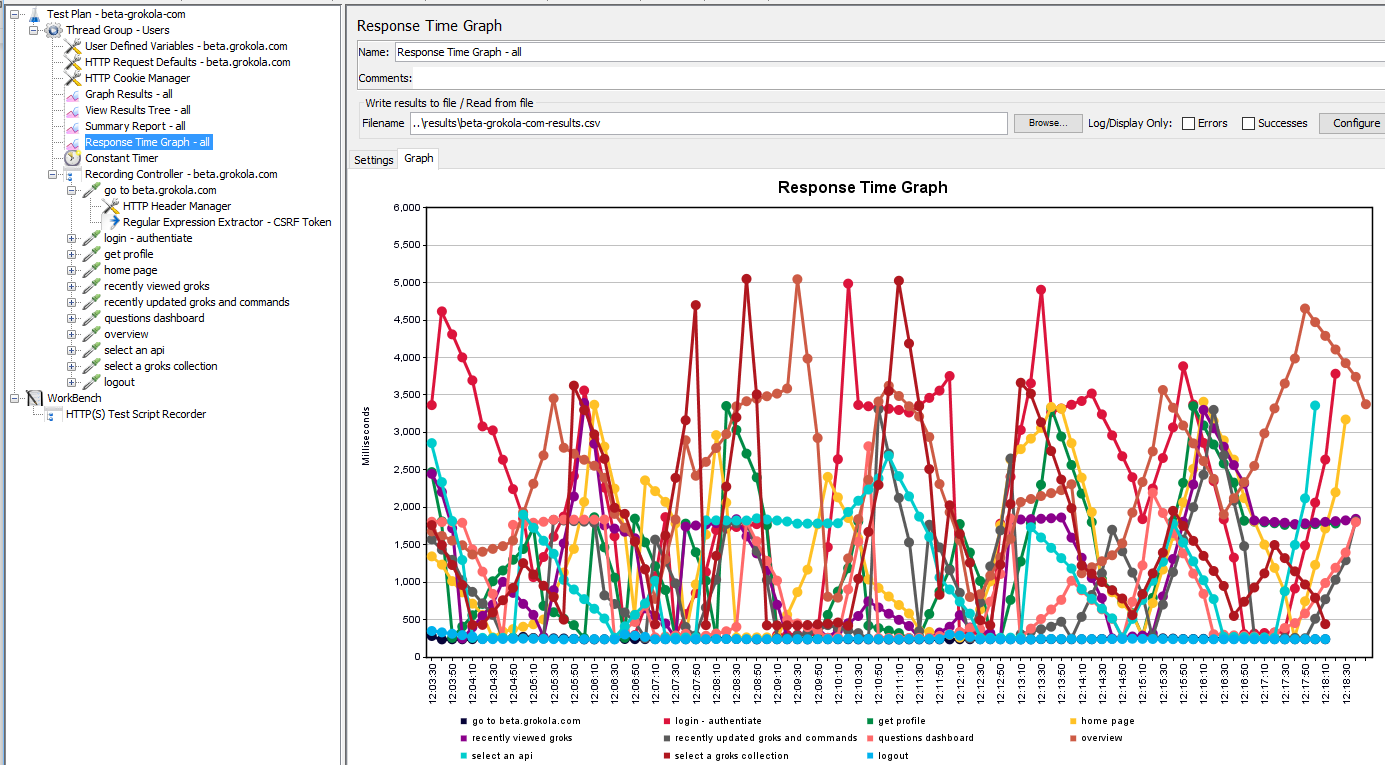

But a performance test that tries to give you insights into how the application will behave under the load of lots of users and under production-like circumstances is far from trivial. For instance, given a certain infrastructural setup and a given number of concurrent users, what are you going to measure? The average response time per HTTP request? The number of HTTP requests per second? I see why some people would suggest that, but ask yourself this question: is there a correlation between what an end-user is doing and what HTTP requests the browser will issue? Maybe for a specific version of your code base and maybe a specific configuration (if this concept exists), but this is very likely to change from version to version. Then how do you compare the response times between versions? Well, use functional transactions.

I first learned this term from Andrei Chis, our resident QA engineer. Examples of functional transactions (FTs) are scenarios such as logging in, searching for documents based on specific filters, signing a digital document, or any other end-user scenario. The point is that you design those scenarios up-front, record or encode them in your performance test, and then use them as a baseline. So even if a newer version of your code base changes the number and nature of the HTTP requests between the browser and the server, you can still compare the response time of that specific scenario between different versions. And since these scenarios are functional, it’ll be a whole lot easier to correlate a spike in response times to a specific part of the application.
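To make the idea concrete, here is a minimal sketch of timing a whole functional transaction rather than individual requests. The `send` function is a hypothetical stand-in that only sleeps to simulate latency, and the paths are made up. In a real test it would be whatever HTTP client your tool provides.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Response times (in seconds) collected per functional transaction name.
results: dict[str, list[float]] = defaultdict(list)


@contextmanager
def functional_transaction(name: str):
    """Time everything executed inside the block as one named scenario."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[name].append(time.perf_counter() - start)


def send(method: str, path: str) -> None:
    """Hypothetical stand-in for a real HTTP call; it only simulates latency."""
    time.sleep(0.01)


# The "Log in" scenario may issue two requests in this release and three in
# the next one; the recorded metric is still reported under "Log in".
with functional_transaction("Log in"):
    send("GET", "/login")
    send("POST", "/api/session")
```

Because the metric is keyed by the scenario name and not by URL, adding, removing or merging requests in a later release doesn't break the comparison.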

Averages or something else?

Any performance test will execute the same scenarios over and over again, not just because of the duration of the test, but also because it’s supposed to simulate lots of concurrent users. To make sense of those results, you’ll need to aggregate those numbers into something you can reason about. Taking the average response time per functional transaction is a good starting point and will give you a quick overview of which parts of your application are underperforming. However, when you use averages, very slow responses can be hidden by very fast responses. That’s why we prefer to include the 90th percentile as well. If the 90th percentile of the response times is 500 ms, it means that 90% of all executed scenarios completed in 500 ms or less. Those two values should give you enough information to compare different versions of your code base and quickly identify new performance bottlenecks. And if you need more granular results, check out this great article by the Dynatrace folks.
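The sketch below shows how an average and a 90th percentile can tell different stories about the same functional transaction. It uses the nearest-rank definition of a percentile (one of several common definitions; your tool may interpolate instead), and the response times are made up for illustration.

```python
import math
import statistics


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least p%
    of all samples are less than or equal to it."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical response times (ms) for one functional transaction:
# eight fast runs and two very slow ones.
login_ms = [120, 130, 125, 118, 122, 127, 119, 121, 2400, 2600]

print(statistics.mean(login_ms))  # 598.2
print(percentile(login_ms, 90))   # 2400
```

An average of roughly 600 ms looks tolerable, while the 90th percentile makes it obvious that a noticeable share of the runs took well over two seconds.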

Are you realistic enough?

Although we now know what we’re going to be measuring, developing a performance test that simulates actual users is the next set of hurdles we’ll need to address. First of all, how well do you know how your users are interacting with your application? Unless you collect detailed run-time telemetry (which comes with a performance cost as well), you can only guess, resulting in test scenarios that are nothing more than assumptions. This gets worse if your application allows fine-grained configuration, so that different (groups of) users cause the application to behave slightly differently depending on the configuration they are associated with.

Most teams I’ve run into use some kind of recording tool that records the test scenarios you execute in the browser and then allows you to group those into the functional transactions mentioned earlier, parameterize certain HTTP requests and apply any additional customizations. In a modern SPA, a lot of intelligence is embedded in the JavaScript code, including HTTP caching, Local Storage and the use of sophisticated data management libraries such as Redux or Reflux. So if you record whatever communication happens between the browser and the server and use that for your performance test, you’re essentially using a snapshot of what really happened. Maybe that snapshot represents a very optimistic scenario where relatively few requests are made, but it could just as well be a very pessimistic scenario. Either way, you never know how realistic it is.
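Parameterizing a recorded request usually means replacing the values captured for the recording user with per-virtual-user values. Here is a minimal sketch using Python's `string.Template`. The endpoint and field names are hypothetical, and real recording tools have their own variable syntax for this.

```python
from string import Template

# A request captured by a recording tool, with the recorded user's values
# replaced by placeholders (the path and field names are hypothetical).
recorded_request = Template(
    'POST /api/documents/search {"owner": "$user_id", "status": "$status"}'
)


def build_request(user_id: str, status: str) -> str:
    """Fill in the placeholders for one virtual user."""
    return recorded_request.substitute(user_id=user_id, status=status)


print(build_request("user-042", "signed"))
```

This only varies the data. It does not change which requests are sent, so the snapshot problem described above remains.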

Another consequence of modern browsers and JavaScript code is that a single user interaction can result in multiple (overlapping) HTTP requests to the server. Just open Facebook with the Chrome Developer Tools (F12) and notice how the different parts of the page get initialized through multiple concurrent HTTP requests. Now, I don’t know about other recording products, but JMeter assumes that each request is independent and will execute them sequentially during a test. So even if your page loads multiple images concurrently, JMeter has no way to unambiguously tell whether those concurrent requests were intentional or not. If your load is sufficiently high, the large number of concurrent requests will probably make this less of an issue. But at least it’s something to consider.
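To see why this matters for the measured response time of a scenario, here is a small simulation. It is not how JMeter works internally. It uses `asyncio.sleep` as a stand-in for a request with a fixed, hypothetical latency and replays the same four requests first one after another, then concurrently as a browser might.

```python
import asyncio
import time

LATENCY_S = 0.05  # hypothetical per-request latency

PATHS = ["/api/feed", "/api/notifications", "/api/profile", "/api/chat"]


async def fetch(path: str) -> str:
    """Stand-in for an HTTP request; it only simulates network latency."""
    await asyncio.sleep(LATENCY_S)
    return path


async def replay_sequentially() -> float:
    start = time.perf_counter()
    for path in PATHS:
        await fetch(path)
    return time.perf_counter() - start


async def replay_concurrently() -> float:
    start = time.perf_counter()
    await asyncio.gather(*(fetch(p) for p in PATHS))
    return time.perf_counter() - start


sequential = asyncio.run(replay_sequentially())
concurrent = asyncio.run(replay_concurrently())
print(f"sequential: {sequential:.2f}s, concurrent: {concurrent:.2f}s")
```

With these numbers, the sequential replay takes about four times as long as the concurrent one. That inflates the scenario's response time even though the server did the same amount of work.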

Any modern application relies heavily on server-side and browser-provided caching, and both are likely to be scoped per user or per session. If such a cache is tied to specific users, you need to make sure that your test scenarios are tweaked to use a unique user for each concurrent test scenario. If you don’t (and we’ve been bitten by this), you end up with test runs in which a large portion of the requests either never happen because of HTTP caching, or respond unusually fast because the data was already cached by the server. Sure, this’ll give you some nice performance results, but I highly doubt they are realistic.
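A minimal sketch of handing each concurrent virtual user its own account is shown below. The account names and passwords are hypothetical and assume the accounts already exist in the system under test. The important design choice is to fail loudly instead of quietly reusing an account, because a reused account brings its warm caches with it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TestAccount:
    username: str
    password: str


def provision_accounts(count: int) -> list[TestAccount]:
    """Hypothetical: one distinct (pre-created) account per virtual user."""
    return [TestAccount(f"perf-user-{i:04d}", f"secret-{i:04d}") for i in range(count)]


def account_for(virtual_user_id: int, accounts: list[TestAccount]) -> TestAccount:
    """Map a virtual user to its own account; never share one between users."""
    if virtual_user_id >= len(accounts):
        raise ValueError("not enough test accounts for the number of virtual users")
    return accounts[virtual_user_id]


accounts = provision_accounts(100)
print(account_for(42, accounts).username)  # perf-user-0042
```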

Speaking of concurrency, how do you define concurrent users in the first place? Is it the number of concurrent requests? Or are you simulating the number of concurrent users sitting behind a machine clicking through your application? And does this include think times? In fact, how well do you even know how many users are using your application and when? Is the load evenly distributed throughout the day or does your particular application have certain peak times? These are all questions you need to be able to answer to have a performance test that makes sense.
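The difference between concurrent users and concurrent requests can be quantified with the interactive response time law from queueing theory, X = N / (R + Z). Here N is the number of concurrent users, R the average response time, Z the average think time and X the resulting throughput. The law assumes a closed system in steady state, and the numbers below are made up, but they show how much think time changes the load that the same number of users produces.

```python
def scenario_throughput(users: int, response_time_s: float, think_time_s: float) -> float:
    """Interactive response time law: X = N / (R + Z).

    users (N): concurrent users, response_time_s (R): average response time,
    think_time_s (Z): average think time between interactions.
    Returns the scenarios per second the system has to sustain.
    """
    return users / (response_time_s + think_time_s)


# Hypothetical: 500 concurrent users and a 1 second response time.
print(scenario_throughput(500, 1.0, 9.0))  # 50.0 (with 9 s think time)
print(scenario_throughput(500, 1.0, 0.0))  # 500.0 (no think time)
```

So "500 concurrent users" without think time puts ten times as much load on the system as the same 500 users pausing nine seconds between clicks.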

What about the technical challenges?

Next to all the hard questions about achieving some realism, we’ve also faced quite a few technical challenges that make performance testing a significant effort. For instance, suppose your application has protection against cross-site request forgery. For each GET request, the server will pass a secret token along with the authentication cookie and keep this token in some sort of session state. Upon each subsequent PUT or DELETE request, the browser will need to pass that token back to the server through a form field or request header. Your performance tests will need to include the logic to extract the token from the response and pass it back in the form field or header. I can tell you that this is not a trivial task.
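As a minimal sketch of that extraction step, the code below assumes the token arrives as a cookie and has to be echoed back in a request header. The names `XSRF-TOKEN` and `X-XSRF-TOKEN` are assumptions borrowed from a common convention. Check what your application actually sends, and whether it expects a form field instead.

```python
from http.cookies import SimpleCookie
from typing import Optional

# Assumed names; your application may use different ones.
CSRF_COOKIE = "XSRF-TOKEN"
CSRF_HEADER = "X-XSRF-TOKEN"


def extract_csrf_token(set_cookie_headers: list[str]) -> Optional[str]:
    """Find the anti-forgery token among the Set-Cookie headers of a GET response."""
    for header in set_cookie_headers:
        cookie = SimpleCookie()
        cookie.load(header)
        if CSRF_COOKIE in cookie:
            return cookie[CSRF_COOKIE].value
    return None


def with_csrf_header(headers: dict[str, str], token: str) -> dict[str, str]:
    """Return a copy of the headers for a PUT or DELETE, carrying the token back."""
    return {**headers, CSRF_HEADER: token}


token = extract_csrf_token(["session=xyz; Path=/; HttpOnly", "XSRF-TOKEN=abc123; Path=/"])
print(with_csrf_header({"Accept": "application/json"}, token))
```

Every virtual user has to run this for its own session, because tokens are tied to the session state on the server.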

Another common pitfall is side effects caused by one user session doing something with shared data that the pre-recorded test scenario isn’t expecting. Typically those don’t happen when you do a single-user trial run, and may not even happen until you reach a certain number of concurrent users. When this happens, and if you’re lucky, your test runner may surface it immediately. But I’ve also seen tests where we needed to dig through megabytes of logging.

I also assume that most of your performance tests run in the cloud. In one of our projects, we rely heavily on Terraform and TeamCity to run multiple performance tests in parallel on different hardware configurations. However, if you don’t use physical hardware, you need to thoroughly understand the specifications and characteristics of the virtual machines you’re running on. For instance, the Amazon EC2 instance types from the t-series (e.g. t3.2xlarge) are general-purpose machines that are burstable. In other words, for a limited time (based on so-called CPU credits), they can run above their baseline CPU performance to handle peak workloads. So depending on how long those machines have been running in your tests, you may see unexpected performance boosts in your results.

And what about applications that use federated identity techniques such as OIDC? The performance test needs to somehow replay the complicated orchestration of requests and token exchanges that happens between your main application and the server doing the authentication (the identity provider). This can quickly become very complicated and is probably not worth the effort. If switching back to simple forms authentication is an option, I would highly recommend doing so.

But… are they useful at all?

With everything I said before, you might start to wonder whether you should even bother investing in performance tests. However, it all depends on your expectations. If you expect to be able to simulate your production performance, you’re going to need to mirror your production hardware very closely and heavily invest in collecting run-time telemetry. If you don’t do both, either your results will be off or you’ll be measuring the wrong thing.

But if you use them as a baseline for comparing the performance between releases, measuring the effects of specific technical improvements, or understanding the behavior of a system running on different hardware configurations, they are extremely valuable. And even though the results will not be comparable with a production environment, they may surface bottlenecks pretty quickly. This has been so valuable for us that we’re in the process of running them on a weekly basis, and we will soon allow developers to run a performance test on a pull request by simply triggering a TeamCity build configuration backed by AWS and Terraform. How cool is that?
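Using the results as a baseline can be as simple as comparing the 90th percentile of each functional transaction against the previous release. The sketch below does exactly that. The 10% tolerance is an arbitrary choice and the numbers are made up. The comparison is only meaningful when both runs used the same scenarios and the same hardware configuration.

```python
def find_regressions(
    baseline_p90_ms: dict[str, float],
    candidate_p90_ms: dict[str, float],
    tolerance: float = 0.10,
) -> dict[str, tuple[float, float]]:
    """Return the functional transactions whose 90th percentile got slower than
    the baseline by more than `tolerance` (10% by default, an arbitrary choice)."""
    regressions = {}
    for name, before in baseline_p90_ms.items():
        after = candidate_p90_ms.get(name)
        if after is not None and after > before * (1 + tolerance):
            regressions[name] = (before, after)
    return regressions


# Hypothetical 90th percentiles (ms) of two releases.
baseline = {"Log in": 480.0, "Search documents": 900.0, "Sign document": 1200.0}
candidate = {"Log in": 470.0, "Search documents": 1150.0, "Sign document": 1250.0}
print(find_regressions(baseline, candidate))
# {'Search documents': (900.0, 1150.0)}
```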

So what do you think? Have you been successful with performance tests? Do you have any tips or tricks to share that can help us all? Or did you just give up on performance testing? I would love to hear your thoughts by commenting below. Oh, and follow me at @ddoomen to get regular updates on my everlasting quest for better solutions.

Aviva Solutions

Fluent Assertions