Profiling legacy code using characterization tests

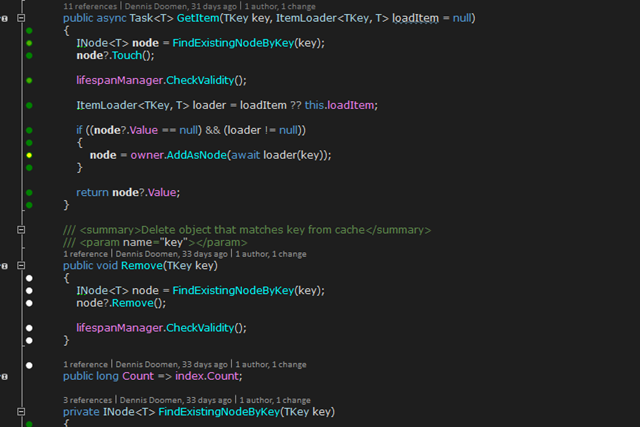

As you might have read, I've been refactoring some example code for a multi-threaded cache that I got from CodeProject into a source-only NuGet package which will soon be published as FluidCaching. Since this cache has been built to be very performant, the internal algorithms are not trivial to grasp. The fact that the code doesn't follow any coding conventions, doesn't have any unit tests and clearly violates all SOLID principles doesn't help either. My intention was to do some serious internal refactoring and get rid of custom-built thread-synchronization primitives that can be replaced with built-in .NET Framework classes.

But how can I do that without breaking any of the existing functionality? Without unit tests, there's no safeguard that protects me from breaking things. Heck, the existing code might even contain bugs (and it did). Sure, I've used the original code in a RavenDB spike, but that doesn't prove it will work correctly under all circumstances. A couple of years ago, Michael Feathers wrote a brilliant book full of strategies for dealing with legacy code. I don't recall his exact definition of 'legacy code', but by my definition, legacy code is every line of code that isn't covered by some automated test, isn't governed by well-defined coding conventions, or hasn't been designed according to accepted object-oriented design practices such as Clean Code and SOLID.

One of the many techniques he discusses in his book is to take an existing code base and write a couple of automated end-to-end tests that set up the appropriate initial state and exercise the API to observe the results. Depending on the complexity of that code base, you might not even be able to predict the outcome of a test. So just run those test cases, observe the outcome and finalize the tests by adding the necessary code to assert that the next run gives you the same results. When I did this for FluidCaching, the mere act of tweaking the input parameters and observing the outcome gave me great insight into the underlying caching algorithm. By encoding those combinations as unit tests, I was gradually building what Michael coined characterization tests. In a way, you're building a profile of that code base in a similar fashion to how FBI profilers build a profile of a serial killer.
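To make that observe-then-pin workflow concrete, here is a minimal sketch in Python rather than C#. `LegacyCache` is a hypothetical stand-in for code you don't fully understand yet; it is not FluidCaching's actual algorithm. The expected list in the test was not derived from a specification. It is simply what the first run returned, copied into the assertion.

```python
from collections import OrderedDict


class LegacyCache:
    """Hypothetical legacy class whose eviction rules we want to discover."""

    def __init__(self, capacity):
        self._capacity = capacity
        self._items = OrderedDict()

    def get(self, key, factory):
        if key in self._items:
            self._items.move_to_end(key)
            return self._items[key]
        value = factory(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)
        return value

    def keys(self):
        return list(self._items)


def characterize_eviction():
    """Set up a known state, exercise the API and return what we observe."""
    cache = LegacyCache(capacity=2)
    cache.get("a", str.upper)
    cache.get("b", str.upper)
    cache.get("a", str.upper)  # touch "a" again before the cache overflows
    cache.get("c", str.upper)  # a third key forces an eviction
    return cache.keys()


def test_eviction_keeps_the_most_recently_used_keys():
    # Step 1: run characterize_eviction() once and look at the result.
    # Step 2: paste the observed result here so the next run must match it.
    assert characterize_eviction() == ["a", "c"]
```

Varying the capacity or the order of the `get` calls and pinning each observed result is what gradually builds up the profile of the code.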

When your characterization tests cover enough of the code base (and a code coverage tool like NCrunch can really help you here), you're ready for the next steps. You can start refactoring the internal implementation while relying on the safety net provided by your tests. Or you can start isolating external dependencies such as databases, file systems, web services or proprietary integration points. Without these, your tests will run much faster, and you can replace expensive set-up code with more focused and readable test data builders or test doubles. Jeremy D. Miller used to refer to this approach as Isolate The Ugly Stuff. It is an important step toward more isolation. More isolation leads to more control, less fear and a faster development cycle, which allows for more aggressive refactoring.
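As a rough illustration of a test double combined with a test data builder, consider the Python sketch below. Every name in it (`InMemoryOrderStore`, `total_spent`, `an_order`) is hypothetical and only serves the example. The assumption is that `total_spent` used to talk to a real database and now accepts any object that offers an `orders_for` method.

```python
class InMemoryOrderStore:
    """Test double that stands in for a hypothetical database-backed store."""

    def __init__(self, orders):
        self._orders = list(orders)

    def orders_for(self, customer_id):
        return [o for o in self._orders if o["customer_id"] == customer_id]


def total_spent(store, customer_id):
    """Code under test: depends only on the store's orders_for() method."""
    return sum(order["amount"] for order in store.orders_for(customer_id))


def an_order(customer_id="c1", amount=10):
    """Test data builder: sensible defaults, override only what matters."""
    return {"customer_id": customer_id, "amount": amount}


def test_total_only_includes_orders_of_the_requested_customer():
    store = InMemoryOrderStore([
        an_order(amount=10),
        an_order(amount=15),
        an_order(customer_id="c2", amount=99),
    ])
    assert total_spent(store, "c1") == 25
```

The test no longer needs a database, and the builder keeps the set-up down to the values that matter for the scenario.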

Once the code base no longer relies on expensive external dependencies, it's time to get rid of any static mutable state. In .NET, the use of DateTime.Now is a great example of that. Using thread-static class fields is another. This may sound like a decent idea, but just try to run your tests in parallel (like xUnit does out-of-the-box). Chances are that your tests will start to fail in a non-deterministic way. Even the Service Locator pattern promoted by Microsoft's Enterprise Library is a bad example. Obviously, all these little refactorings should open up the possibility of introducing more well-written unit tests that keep you out of debugger hell. Just be careful to think deeply about the scope of a unit and consider these tips and tricks while you're at it. When you have enough unit tests in place and you feel confident that you have control of your code base, you can consider getting rid of those ugly and unmaintainable characterization tests. However, I would recommend keeping a few around as integration or subcutaneous tests, provided they are easy enough to understand in case they fail.
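Going back to the point about static mutable state: one common way to tame something like DateTime.Now is to pass the clock in rather than reach for it globally. The Python sketch below shows the idea with `datetime.now` as the default clock. `CacheEntry` and `FakeClock` are hypothetical names invented for this example, not part of FluidCaching.

```python
from datetime import datetime, timedelta


class CacheEntry:
    """Hypothetical entry that asks an injected clock for the current time."""

    def __init__(self, value, lifetime, clock=datetime.now):
        self._clock = clock
        self.value = value
        self._expires_at = clock() + lifetime

    def is_expired(self):
        return self._clock() >= self._expires_at


class FakeClock:
    """Test double for the clock; each test owns its own instance."""

    def __init__(self, start):
        self.now = start

    def __call__(self):
        return self.now

    def advance(self, delta):
        self.now += delta


def test_entry_expires_once_its_lifetime_has_passed():
    clock = FakeClock(datetime(2020, 1, 1, 12, 0, 0))
    entry = CacheEntry("value", timedelta(minutes=5), clock=clock)

    assert not entry.is_expired()
    clock.advance(timedelta(minutes=5))
    assert entry.is_expired()
```

Because every test creates its own `FakeClock`, no time-related state is shared between tests, so running them in parallel should not cause them to interfere with each other.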

So what do you think? Have you gone through a similar exercise before? I'd love to hear about your experiences, so please leave a comment below. Oh, and please follow me at @ddoomen to get regular updates on my everlasting quest for better solutions.
